Emerging technologies like Lidarmos are quietly reshaping how machines interpret the world, especially in autonomous vehicles, robotics, and smart mapping systems. Over the past few months I’ve been diving into this term (and yes, it’s far more than just a buzzword), experimenting with demos and reading research and industry blogs to understand why this tech matters now. And if you’ve found yourself puzzled by the word “Lidarmos” online, you’re not alone. Different sources use it in slightly different ways, but the common thread is this: it’s about giving LiDAR systems the smarts to see motion and context, not just shapes.

What Exactly Is Lidarmos?

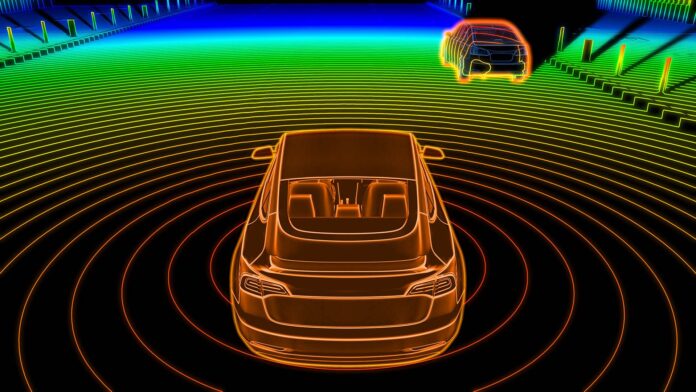

At its core, Lidarmos refers to an advanced form of LiDAR technology, specifically one that integrates motion segmentation into the 3D sensing pipeline. Traditional LiDAR (Light Detection and Ranging) sends out laser pulses and measures the time each one takes to return, creating detailed point clouds that represent the environment. Lidarmos takes that a step further by embedding software and algorithms (often AI and machine learning) that can detect moving objects, distinguish them from the static background, and even predict motion in real time.
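The time-of-flight idea behind every LiDAR return comes down to one line of arithmetic. Here is a minimal sketch of it; the function name and the 200-nanosecond example are my own illustrations, not taken from any particular sensor's API.

```python
# Speed of light in vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to the reflecting surface for a single pulse.

    The pulse travels out and back, so the one-way range is half
    of the total path length.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


# Illustrative value: a return that arrives 200 nanoseconds after emission.
print(f"{range_from_round_trip(200e-9):.2f} m")  # prints "29.98 m"
```

Each measured range, combined with the beam's direction at the moment of firing, gives one 3D point; the point cloud is simply many of these.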

So instead of “just seeing,” Lidarmos helps machines understand movement—a crucial capability if you want a robot or autonomous car to operate safely in dynamic environments.

Why Motion Awareness Matters (And Why Now)?

Ask yourself: how do humans walk through a crowded street without bumping into people? We naturally track movement. But for machines, that’s tough.

Lidarmos bridges this gap by combining:

- High-resolution LiDAR scans
- Temporal analysis across successive frames
- Machine learning for motion detection
- Real-time edge or cloud processing

This combo transforms simple spatial scanning into situational awareness. That’s a big leap from passive sensing to active perception.

Think about it: self-driving cars don’t just need to know where objects are—they need to know if that object is about to cross the road. Lidarmos helps with that. This reminds me of when I tried early autonomous driving software in a simulation lab; without motion segmentation, obstacles felt static—even when they weren’t. The difference was night and day.
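To make the "about to cross the road" question concrete, here is a rough sketch of the simplest possible predictor: estimate sideways speed from an object's position in two frames, then extrapolate at constant velocity. Everything here is an assumption for illustration (the function names, the 1.75 m lane half-width, the 3-second horizon, the pedestrian numbers); real planners use far richer motion models.

```python
def lateral_velocity(prev_x, curr_x, dt):
    """Finite-difference estimate of sideways speed (m/s) from two frames."""
    return (curr_x - prev_x) / dt


def time_to_enter_lane(x, vx, lane_half_width=1.75, horizon_s=3.0):
    """Seconds until the object enters the lane, or None if it will not
    do so within the horizon under a constant-velocity assumption.

    x is the lateral offset from the lane centre, in metres.
    """
    if abs(x) <= lane_half_width:
        return 0.0  # already inside the lane
    moving_toward_lane = (x > 0 and vx < 0) or (x < 0 and vx > 0)
    if not moving_toward_lane:
        return None
    t = (abs(x) - lane_half_width) / abs(vx)
    return t if t <= horizon_s else None


# Hypothetical pedestrian: 4.00 m to the side, then 3.85 m one frame (0.1 s) later.
vx = lateral_velocity(4.00, 3.85, dt=0.1)
t = time_to_enter_lane(3.85, vx)
print(f"lateral speed {vx:.1f} m/s, enters lane in {t:.1f} s")
```

With these made-up numbers the pedestrian is closing at about 1.5 m/s and would reach the lane edge in roughly 1.4 seconds. A static map cannot give you that number at all, which is the whole point of tracking motion.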

How Lidarmos Works in Plain Terms

Here’s a simplified picture:

- Laser Scanning: Lidarmos sensors emit hundreds of thousands of laser pulses per second, or more on high-end units.
- Point Cloud Creation: Reflections return, and each one becomes a 3D point; together they build a model of the world.
- Motion Segmentation: Algorithms compare successive frames to flag movement.
- Object Classification: AI systems distinguish cars, people, and animals.
- Decision Layer: Systems use that data for navigation, tracking, or automation.
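The frame-comparison step can be sketched in a few lines. This is a deliberately naive stand-in for what production systems do with learned models: it bins points into voxels and flags any current point whose voxel was empty in the previous frame. I am assuming both frames are already expressed in the same coordinate frame (that is, the sensor's own motion has been compensated); the function names and the 0.5 m voxel size are hypothetical.

```python
import math


def voxel_key(point, voxel_size):
    """Integer voxel index for an (x, y, z) point."""
    return tuple(math.floor(c / voxel_size) for c in point)


def flag_moving(prev_points, curr_points, voxel_size=0.5):
    """Return one boolean per current point: True if the point's voxel
    was unoccupied in the previous frame (a crude motion cue)."""
    prev_occupied = {voxel_key(p, voxel_size) for p in prev_points}
    return [voxel_key(p, voxel_size) not in prev_occupied for p in curr_points]


# Toy scene: a static wall at x = 5 m, plus one object that moves 1 m sideways.
wall = [(5.0, 0.1 + 0.5 * i, 0.2) for i in range(4)]
prev_frame = wall + [(2.1, 0.1, 0.2)]
curr_frame = wall + [(2.1, 1.1, 0.2)]

print(flag_moving(prev_frame, curr_frame))
# prints [False, False, False, False, True]
```

The obvious weakness is that background revealed when an object moves away also lands in "new" voxels and gets flagged, which is one reason real pipelines look at longer frame histories and learned features instead of a single difference.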

And unlike earlier systems that sent raw data back to a central CPU, Lidarmos pushes processing closer to the edge—reducing latency and making split-second decisions possible.
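A quick back-of-the-envelope calculation shows why latency matters so much. The two latency figures below are assumptions I picked for illustration, not measurements from any specific system.

```python
def distance_during_latency(speed_kmh, latency_s):
    """Metres travelled before the system can react to a new frame."""
    return speed_kmh / 3.6 * latency_s  # km/h -> m/s, then times seconds


# Hypothetical processing delays for a vehicle moving at 50 km/h.
for label, latency_s in [("remote round trip", 0.100), ("on-device", 0.020)]:
    d = distance_during_latency(50, latency_s)
    print(f"{label}: {d:.2f} m travelled at 50 km/h")
```

Under these assumptions the vehicle covers about 1.39 m while waiting on a 100 ms round trip, versus about 0.28 m with a 20 ms on-device pipeline.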

Where Is Lidarmos Being Used Today?

This isn’t futuristic sci-fi—it’s applied across multiple sectors:

Autonomous Vehicles

Lidarmos helps driverless systems identify moving vehicles and pedestrians in real time, which improves safety and navigation reliability.

Robotics

Warehouse bots, delivery robots, and drones use Lidarmos to avoid collisions and operate in dynamic environments. It gives them a sort of situational intuition.

Smart Cities and Mapping

Cities use motion-aware 3D scanning for traffic monitoring, crowd analysis, and infrastructure planning. Unlike cameras, LiDAR (and by extension Lidarmos) works well in darkness and poor lighting.

Environmental Surveys

From forests to disaster zones, Lidarmos can map terrain accurately, even under vegetation, because some laser pulses pass through gaps in the canopy and reach the ground. That makes it valuable for conservation and emergency planning.

How Does Lidarmos Compare to Traditional LiDAR?

| Feature | Traditional LiDAR | Lidarmos |
|---|---|---|
| Static Mapping | ✔️ | ✔️ |
| Motion Detection | ❌ | ✔️ |
| Real-Time Awareness | ❌ | ✔️ |
| AI Integration | Limited | Deeply Integrated |
| Decision-Level Output | Post-processing | On-Sensor / Edge |

In short: Lidarmos isn’t just about capturing data—it’s about interpreting it as it happens.

Game changer.

Challenges and Limitations Still Ahead

No tech is perfect. Even Lidarmos faces hurdles:

- Weather Sensitivity: Heavy rain, fog, or snow can scatter laser pulses, reducing accuracy.
- Computational Load: Real-time segmentation requires significant processing power.
- Data Volume: Continuous scanning yields massive datasets that need efficient storage and filtering.
- Standardization: Universal standards for data formats and interoperability are still evolving.
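To put the data-volume point in numbers, here is a small sketch. The figures are assumptions for illustration: a sensor producing 600,000 points per second, with each point stored as four 32-bit floats (x, y, z, intensity) for 16 bytes. The voxel filter shown is one common way to thin a cloud, keeping a single point per grid cell; the function names are my own.

```python
import math


def raw_data_rate(points_per_second, bytes_per_point):
    """Uncompressed bytes produced per second."""
    return points_per_second * bytes_per_point


def voxel_downsample(points, voxel_size):
    """Keep the first point seen in each voxel and drop the rest."""
    kept = {}
    for p in points:
        key = tuple(math.floor(c / voxel_size) for c in p)
        kept.setdefault(key, p)
    return list(kept.values())


rate = raw_data_rate(600_000, 16)  # assumed sensor rate and point size
print(f"{rate / 1e6:.1f} MB/s, {rate * 3600 / 1e9:.2f} GB per hour")
# prints "9.6 MB/s, 34.56 GB per hour"

cloud = [(0.01, 0.02, 0.0), (0.03, 0.04, 0.0), (1.2, 0.0, 0.0)]
print(len(voxel_downsample(cloud, voxel_size=0.5)))  # prints 2
```

Even at these modest assumed rates, an hour of raw scanning runs to tens of gigabytes, so filtering before storage is usually part of the design rather than an afterthought.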

So while the promise is huge, practical deployment still requires smart system design.

Is Lidarmos Just a Buzzword—or a Real Trend?

With research accelerating and companies integrating motion awareness into next-gen LiDAR, Lidarmos isn’t a fad. It’s part of a broader shift toward intelligent sensing systems. Just as GPS evolved into real-time navigation with traffic prediction, LiDAR is evolving into a contextual awareness platform through Lidarmos.

If you’re considering this tech for a project—whether it’s autonomous vehicles, robotics, or urban scanning—here’s what you should know: understanding motion isn’t optional anymore.

Real World: My Take After Testing Concepts

I’ve spent time experimenting with open-source motion segmentation demos and even flown a LiDAR-equipped drone through a mock obstacle course using an early Lidarmos-style stack. The difference between raw point clouds and motion-aware maps is striking. Suddenly, a scan isn’t just a snapshot—it’s a live understanding of what’s happening now. It’s like watching a map that knows movement the way a human does (without blinking). The promise is thrilling—and the potential enormous.

Lidarmos isn’t just enhancing machines’ perception. It’s pushing us toward systems that reason about change—not just measure it. As we continue into 2026 and beyond, the question isn’t if motion-aware LiDAR will be widespread—it’s when. And I, for one, am excited to see where this journey takes us.